EEMEFN: Low-Light Image Enhancement via Edge-Enhanced Multi-Exposure Fusion Network


Abstract

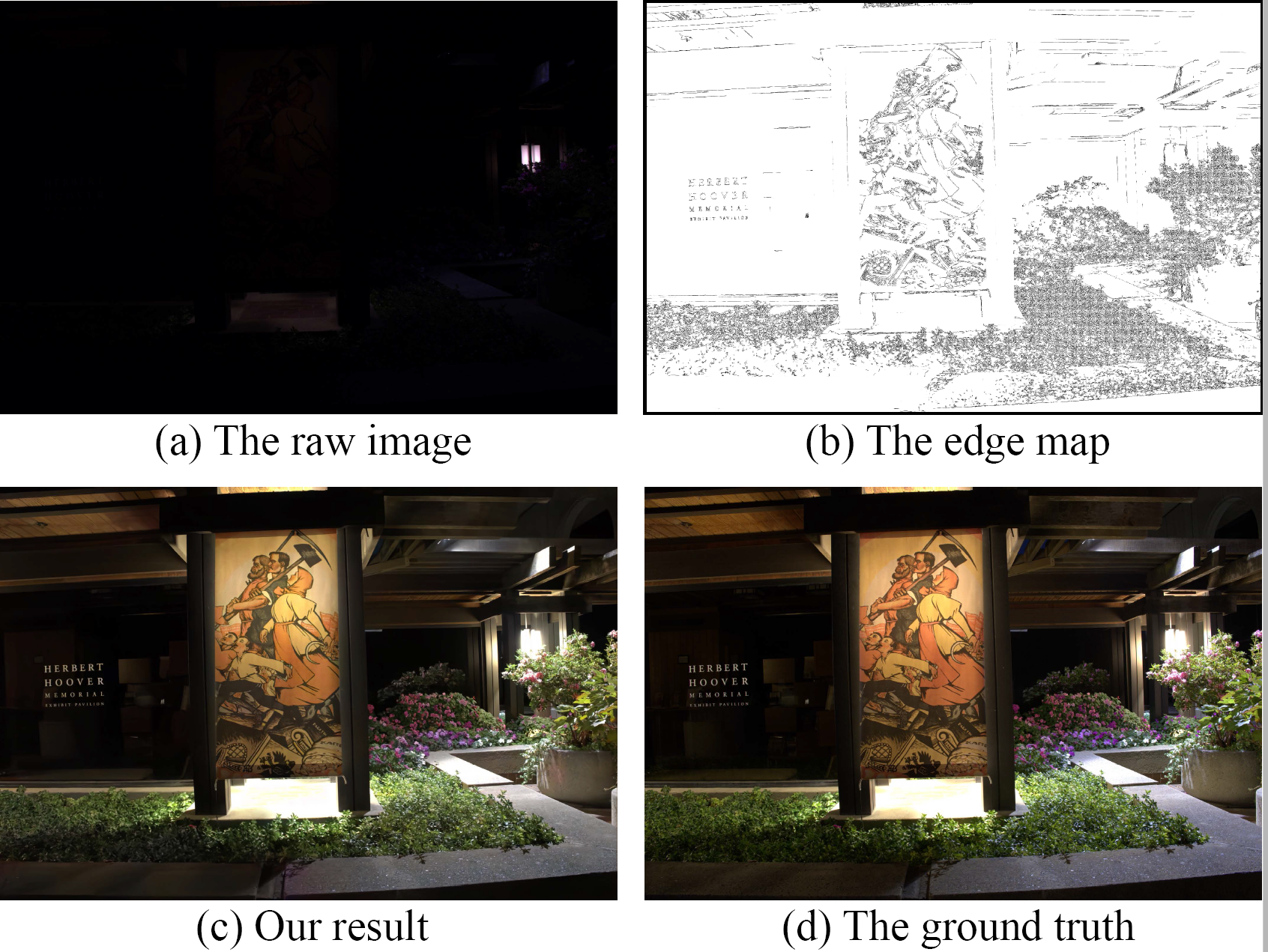

This work focuses on extremely low-light image enhancement, which aims to improve image brightness and reveal hidden information in dark areas. Recently, image enhancement approaches have made impressive progress. However, existing methods still suffer from three main problems: (1) low-light images are usually high-contrast, and existing methods may fail to recover image details in extremely dark or bright areas; (2) current methods cannot precisely correct the color of low-light images; (3) when object edges are unclear, the pixel-wise loss may treat pixels of different objects equally and produce blurry images. In this paper, we propose a two-stage method called Edge-Enhanced Multi-Exposure Fusion Network (EEMEFN) to enhance extremely low-light images. In the first stage, we employ a multi-exposure fusion module to address the high-contrast and color-bias issues. We synthesize a set of images with different exposure times from a single image and construct an accurate normal-light image by combining well-exposed areas under different illumination conditions. This stage can thus produce realistic initial images with correct color from extremely noisy low-light images. In the second stage, we introduce an edge enhancement module to refine the initial images with the help of edge information. Therefore, our method can reconstruct high-quality images with sharp edges when minimizing the pixel-wise loss. Experiments on the See-in-the-Dark dataset indicate that our EEMEFN approach achieves state-of-the-art performance.
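To make the multi-exposure idea concrete, the following is a minimal NumPy sketch of "synthesize several exposures from one image, then combine the well-exposed areas." It is an illustration under stated assumptions, not the EEMEFN fusion module: the paper's first stage is a learned network, whereas this sketch swaps in a hand-crafted Gaussian well-exposedness weight in the style of classical exposure fusion (Mertens et al.). The function names, the amplification ratios, and the `sigma` value are hypothetical choices, and the input is assumed to be a linear image normalized to [0, 1].

```python
import numpy as np


def synthesize_exposures(raw, ratios):
    """Simulate longer exposures by scaling a linear low-light image.

    Hypothetical helper: each ratio multiplies the pixel values and the
    result is clipped to [0, 1], mimicking sensor saturation.
    """
    raw = np.asarray(raw, dtype=np.float64)
    return [np.clip(raw * r, 0.0, 1.0) for r in ratios]


def well_exposedness(img, sigma=0.2):
    """Gaussian weight centred on mid-gray (0.5); sigma is an assumed value.

    Pixels near 0.5 get weight close to 1; very dark or saturated
    pixels get small weights.
    """
    return np.exp(-((img - 0.5) ** 2) / (2.0 * sigma ** 2))


def fuse(exposures, eps=1e-12):
    """Per-pixel weighted average of the exposures (a convex combination)."""
    weights = [well_exposedness(e) for e in exposures]
    total = np.sum(weights, axis=0) + eps  # avoid division by zero
    return sum(w * e for w, e in zip(weights, exposures)) / total


# Usage: one very dark pixel and one moderately lit pixel.
raw = np.array([0.01, 0.4])
exposures = synthesize_exposures(raw, ratios=[1, 2, 50])
fused = fuse(exposures)
print(fused)  # the dark pixel is lifted toward mid-gray; the lit pixel is not blown out
```

Because the output at each pixel is a weighted average of the synthesized exposures, it always lies between the darkest and brightest candidate, and the weighting favors whichever exposure leaves that pixel closest to mid-gray. This is the intuition behind combining well-exposed areas from different illumination conditions; EEMEFN learns this combination instead of fixing the weights by hand.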